Perform Extractive and Abstractive Summarization

The rental property app maintained by Margie’s Travel provides a way for property managers to describe rental listings. Many of the descriptions in the system are long, giving many details about the rental property, its neighborhood, and local attractions, stores, and other amenities. A feature that has been requested as you implement new AI-powered capabilities for the app is using generative AI to create concise summaries of these descriptions, making it easier for your users to review properties quickly. In this exercise, you use the azure_ai extension in an Azure Database For PostgreSQL flexible server to perform abstractive and extractive summarization on rental property descriptions and compare the resulting summaries.

Before you start

You need an Azure subscription with administrative rights.

Deploy resources into your Azure subscription

This step guides you through using Azure CLI commands from the Azure Cloud Shell to create a resource group and run a Bicep script to deploy the Azure services necessary for completing this exercise into your Azure subscription.

-

Open a web browser and navigate to the Azure portal.

-

Select the Cloud Shell icon in the Azure portal toolbar to open a new Cloud Shell pane at the bottom of your browser window.

If prompted, select the required options to open a Bash shell. If you have previously used a PowerShell console, switch it to a Bash shell.

-

At the Cloud Shell prompt, enter the following to clone the GitHub repo containing exercise resources:

git clone https://github.com/MicrosoftLearning/mslearn-postgresql.git -

Next, you run three commands to define variables to reduce redundant typing when using Azure CLI commands to create Azure resources. The variables represent the name to assign to your resource group (

RG_NAME), the Azure region (REGION) into which resources will be deployed, and a randomly generated password for the PostgreSQL administrator login (ADMIN_PASSWORD).In the first command, the region assigned to the corresponding variable is

eastus, but you can also replace it with a location of your preference. However, if replacing the default, you must select another Azure region that supports abstractive summarization to ensure you can complete all of the tasks in the modules in this learning path.REGION=eastusThe following command assigns the name to be used for the resource group that will house all the resources used in this exercise. The resource group name assigned to the corresponding variable is

rg-learn-postgresql-ai-$REGION, where$REGIONis the location you specified above. However, you can change it to any other resource group name that suits your preference.RG_NAME=rg-learn-postgresql-ai-$REGIONThe final command randomly generates a password for the PostgreSQL admin login. Make sure you copy it to a safe place to use later to connect to your PostgreSQL flexible server.

a=() for i in {a..z} {A..Z} {0..9}; do a[$RANDOM]=$i done ADMIN_PASSWORD=$(IFS=; echo "${a[*]::18}") echo "Your randomly generated PostgreSQL admin user's password is:" echo $ADMIN_PASSWORD -

If you have access to more than one Azure subscription, and your default subscription is not the one in which you want to create the resource group and other resources for this exercise, run this command to set the appropriate subscription, replacing the

<subscriptionName|subscriptionId>token with either the name or ID of the subscription you want to use:az account set --subscription <subscriptionName|subscriptionId> -

Run the following Azure CLI command to create your resource group:

az group create --name $RG_NAME --location $REGION -

Finally, use the Azure CLI to execute a Bicep deployment script to provision Azure resources in your resource group:

az deployment group create --resource-group $RG_NAME --template-file "mslearn-postgresql/Allfiles/Labs/Shared/deploy.bicep" --parameters restore=false adminLogin=pgAdmin adminLoginPassword=$ADMIN_PASSWORDThe Bicep deployment script provisions the Azure services required to complete this exercise into your resource group. The resources deployed include an Azure Database for PostgreSQL flexible server, Azure OpenAI, and an Azure AI Language service. The Bicep script also performs some configuration steps, such as adding the

azure_aiandvectorextensions to the PostgreSQL server’s allowlist (via the azure.extensions server parameter), creating a database namedrentalson the server, and adding a deployment namedembeddingusing thetext-embedding-ada-002model to your Azure OpenAI service. Note that the Bicep file is shared by all modules in this learning path, so you may only use some of the deployed resources in some exercises.The deployment typically takes several minutes to complete. You can monitor it from the Cloud Shell or navigate to the Deployments page for the resource group you created above and observe the deployment progress there.

-

Close the Cloud Shell pane once your resource deployment is complete.

Troubleshooting deployment errors

You may encounter a few errors when running the Bicep deployment script.

-

If you previously ran the Bicep deployment script for this learning path and subsequently deleted the resources, you may receive an error message like the following if you are attempting to rerun the script within 48 hours of deleting the resources:

{"code": "InvalidTemplateDeployment", "message": "The template deployment 'deploy' is not valid according to the validation procedure. The tracking id is '4e87a33d-a0ac-4aec-88d8-177b04c1d752'. See inner errors for details."} Inner Errors: {"code": "FlagMustBeSetForRestore", "message": "An existing resource with ID '/subscriptions/{subscriptionId}/resourceGroups/rg-learn-postgresql-ai-eastus/providers/Microsoft.CognitiveServices/accounts/{accountName}' has been soft-deleted. To restore the resource, you must specify 'restore' to be 'true' in the property. If you don't want to restore existing resource, please purge it first."}If you receive this message, modify the

azure deployment group createcommand above to set therestoreparameter equal totrueand rerun it. -

If the selected region is restricted from provisioning specific resources, you must set the

REGIONvariable to a different location and rerun the commands to create the resource group and run the Bicep deployment script.{"status":"Failed","error":{"code":"DeploymentFailed","target":"/subscriptions/{subscriptionId}/resourceGroups/{resourceGrouName}/providers/Microsoft.Resources/deployments/{deploymentName}","message":"At least one resource deployment operation failed. Please list deployment operations for details. Please see https://aka.ms/arm-deployment-operations for usage details.","details":[{"code":"ResourceDeploymentFailure","target":"/subscriptions/{subscriptionId}/resourceGroups/{resourceGrouName}/providers/Microsoft.DBforPostgreSQL/flexibleServers/{serverName}","message":"The resource write operation failed to complete successfully, because it reached terminal provisioning state 'Failed'.","details":[{"code":"RegionIsOfferRestricted","message":"Subscriptions are restricted from provisioning in this region. Please choose a different region. For exceptions to this rule please open a support request with Issue type of 'Service and subscription limits'. See https://review.learn.microsoft.com/en-us/azure/postgresql/flexible-server/how-to-request-quota-increase for more details."}]}]}} -

If the script is unable to create an AI resource due to the requirement to accept the responsible AI agreement, you may experience the following error; in which case use the Azure Portal user interface to create an Azure AI Services resource, and then re-run the deployment script.

{"code": "InvalidTemplateDeployment", "message": "The template deployment 'deploy' is not valid according to the validation procedure. The tracking id is 'f8412edb-6386-4192-a22f-43557a51ea5f'. See inner errors for details."} Inner Errors: {"code": "ResourceKindRequireAcceptTerms", "message": "This subscription cannot create TextAnalytics until you agree to Responsible AI terms for this resource. You can agree to Responsible AI terms by creating a resource through the Azure Portal then trying again. For more detail go to https://go.microsoft.com/fwlink/?linkid=2164190"}

Connect to your database using psql in the Azure Cloud Shell

In this task, you connect to the rentals database on your Azure Database for PostgreSQL flexible server using the psql command-line utility from the Azure Cloud Shell.

-

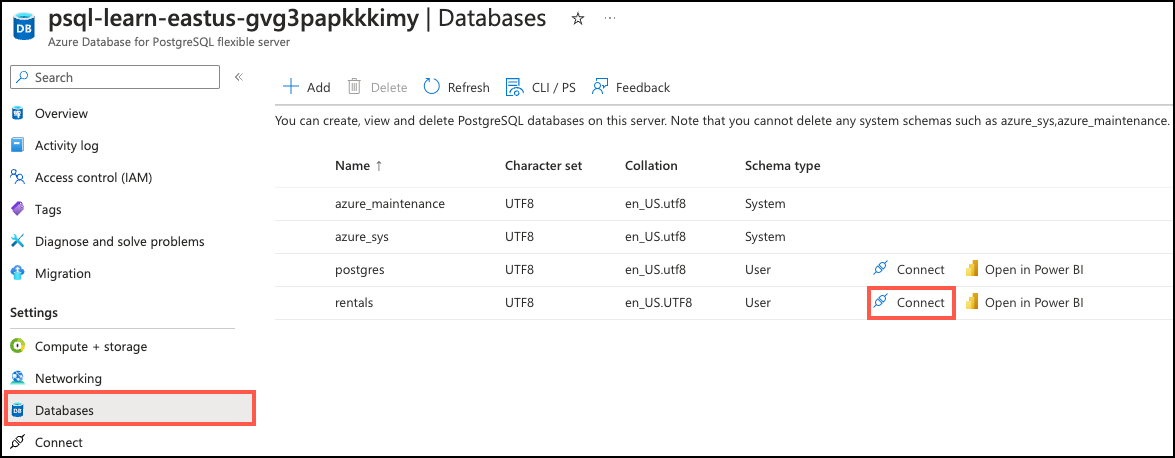

In the Azure portal, navigate to your newly created Azure Database for PostgreSQL flexible server instance.

-

In the resource menu, under Settings, select Databases select Connect for the

rentalsdatabase. Note that selecting Connect does not actually connect you to the database; it simply provides instructions for connecting to the database using various methods. Review the instructions to Connect from browser or locally and use those to connect using the Azure Cloud Shell.

-

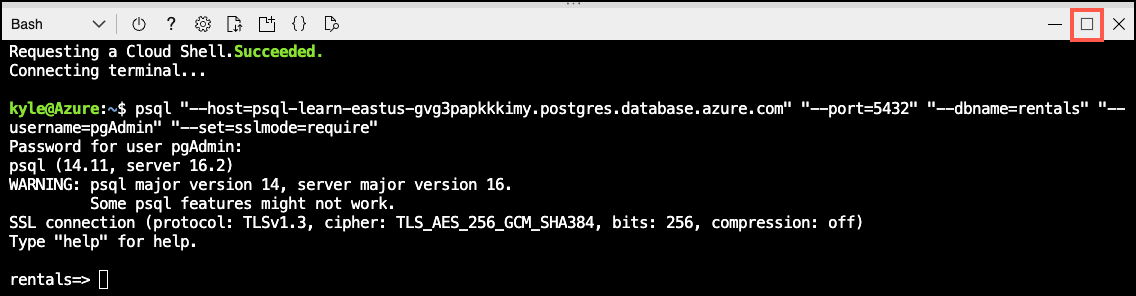

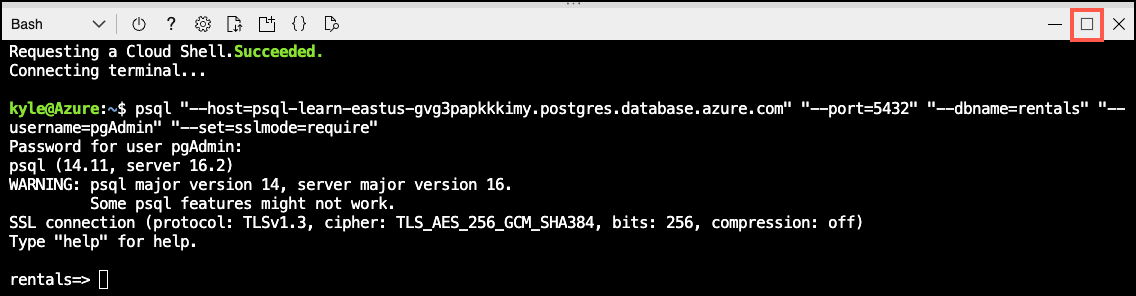

At the “Password for user pgAdmin” prompt in the Cloud Shell, enter the randomly generated password for the pgAdmin login.

Once logged in, the

psqlprompt for therentalsdatabase is displayed. -

Throughout the remainder of this exercise, you continue working in the Cloud Shell, so it may be helpful to expand the pane within your browser window by selecting the Maximize button at the top right of the pane.

Populate the database with sample data

Before you explore the azure_ai extension, add a couple of tables to the rentals database and populate them with sample data so you have information to work with as you review the extension’s functionality.

-

Run the following commands to create the

listingsandreviewstables for storing rental property listing and customer review data:DROP TABLE IF EXISTS listings; CREATE TABLE listings ( id int, name varchar(100), description text, property_type varchar(25), room_type varchar(30), price numeric, weekly_price numeric );DROP TABLE IF EXISTS reviews; CREATE TABLE reviews ( id int, listing_id int, date date, comments text ); -

Next, use the

COPYcommand to load data from CSV files into each table you created above. Start by running the following command to populate thelistingstable:\COPY listings FROM 'mslearn-postgresql/Allfiles/Labs/Shared/listings.csv' CSV HEADERThe command output should be

COPY 50, indicating that 50 rows were written into the table from the CSV file. -

Finally, run the command below to load customer reviews into the

reviewstable:\COPY reviews FROM 'mslearn-postgresql/Allfiles/Labs/Shared/reviews.csv' CSV HEADERThe command output should be

COPY 354, indicating that 354 rows were written into the table from the CSV file.

Install and configure the azure_ai extension

Before using the azure_ai extension, you must install it into your database and configure it to connect to your Azure AI Services resources. The azure_ai extension allows you to integrate the Azure OpenAI and Azure AI Language services into your database. To enable the extension in your database, follow these steps:

-

Execute the following command at the

psqlprompt to verify that theazure_aiand thevectorextensions were successfully added to your server’s allowlist by the Bicep deployment script you ran when setting up your environment:SHOW azure.extensions;The command displays the list of extensions on the server’s allowlist. If everything was correctly installed, your output must include

azure_aiandvector, like this:azure.extensions ------------------ azure_ai,vectorBefore an extension can be installed and used in an Azure Database for PostgreSQL flexible server database, it must be added to the server’s allowlist, as described in how to use PostgreSQL extensions.

-

Now, you are ready to install the

azure_aiextension using the CREATE EXTENSION command.CREATE EXTENSION IF NOT EXISTS azure_ai;CREATE EXTENSIONloads a new extension into the database by running its script file. This script typically creates new SQL objects such as functions, data types, and schemas. An error is thrown if an extension of the same name already exists. AddingIF NOT EXISTSallows the command to execute without throwing an error if it is already installed.

Connect Your Azure AI Services Account

The Azure AI services integrations included in the azure_cognitive schema of the azure_ai extension provide a rich set of AI Language features accessible directly from the database. The text summarization capabilities are enabled through the Azure AI Language service.

-

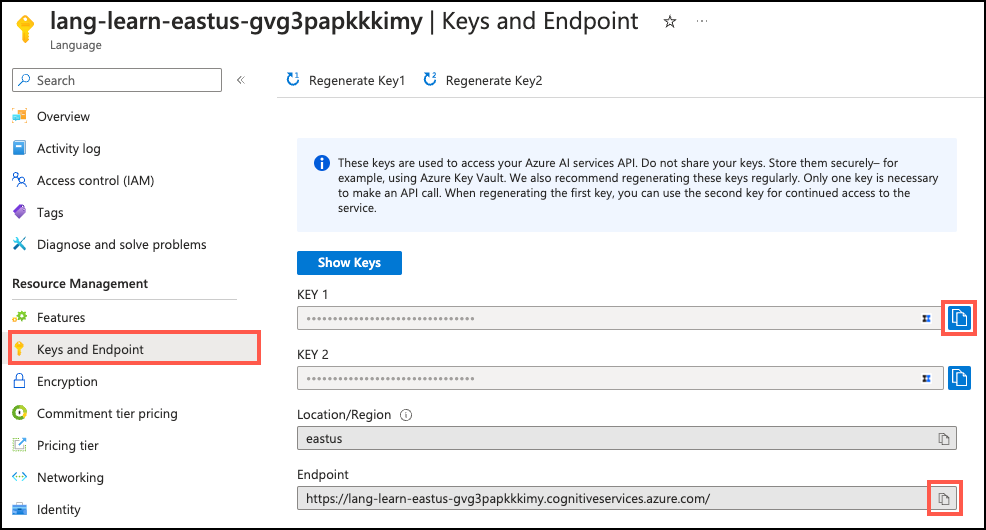

To successfully make calls against your Azure AI Language services using the

azure_aiextension, you must provide its endpoint and key to the extension. Using the same browser tab where the Cloud Shell is open, navigate to your Language service resource in the Azure portal and select the Keys and Endpoint item under Resource Management from the left-hand navigation menu.

[!Note]

If you received the message

NOTICE: extension "azure_ai" already exists, skipping CREATE EXTENSIONwhen installing theazure_aiextension above and have previously configured the extension with your Language service endpoint and key, you can use theazure_ai.get_setting()function to confirm those settings are correct, and then skip step 2 if they are. -

Copy your endpoint and access key values, then in the commands below, replace the

{endpoint}and{api-key}tokens with values you copied from the Azure portal. Run the commands from thepsqlcommand prompt in the Cloud Shell to add your values to theazure_ai.settingstable.SELECT azure_ai.set_setting('azure_cognitive.endpoint', '{endpoint}');SELECT azure_ai.set_setting('azure_cognitive.subscription_key', '{api-key}');

Review the Summarization Capabilities of the Extension

In this task, you review the two summarization functions in the azure_cognitive schema.

-

For the remainder of this exercise, you work exclusively in the Cloud Shell, so it may be helpful to expand the pane within your browser window by selecting the Maximize button at the top right of the Cloud Shell pane.

-

When working with

psqlin the Cloud Shell, enabling the extended display for query results may be helpful, as it improves the readability of output for subsequent commands. Execute the following command to allow the extended display to be automatically applied.\x auto -

The text summarization functions of the

azure_aiextension are found within theazure_cognitiveschema. For extractive summarization, use thesummarize_extractive()function. Use the\dfmeta-command to examine the function by running:\df azure_cognitive.summarize_extractiveThe meta-command output shows the function’s schema, name, result data type, and arguments. This information helps you understand how to interact with the function from your queries.

The output shows three overloads of the

summarize_extractive()function, allowing you to review their differences. TheArgument data typesproperty in the output reveals the list of arguments the three function overloads expect:Argument Type Default Description text textortext[]The text(s) for which summaries should be generated. language_text textortext[]Language code (or array of language codes) representing the language of the text to summarize. Review the list of supported languages to retrieve the necessary language codes. sentence_count integer3 The number of summary sentences to generate. sort_by text‘offset’ The sort order for the generated summary sentences. Acceptable values are “offset” and “rank,” with offset representing the start position of each extracted sentence within the original content and rank being an AI-generated indicator of how relevant a sentence is to the main idea of the content. batch_size integer25 Only for the two overload expecting an input of text[]. Specifies the number of records to process at a time.disable_service_logs booleanfalse Flag indicating whether to turn off service logs. timeout_ms integerNULL Timeout in milliseconds after which the operation is stopped. throw_on_error booleantrue Flag indicating whether the function should, on error, throw an exception resulting in a rollback of the wrapping transaction. max_attempts integer1 Number of times to retry the call to Azure AI Services in the event of a failure. retry_delay_ms integer1000 Amount of time, in milliseconds, to wait before attempting to retry calling the Azure AI Services endpoint. -

Repeat the above step, but this time run the

\dfmeta-command for theazure_cognitive.summarize_abstractive()function and review the output.The two functions have similar signatures, although

summarize_abstractive()does not have thesort_byparameter, and it returns an array oftextversus the array ofazure_cognitive.sentencecomposite types returned by thesummarize_extractive()function. This disparity has to do with the way the two different methods generate summaries. Extractive summarization identifies the most critical sentences within the text it is summarizing, ranks them, and then returns those as the summary. On the other hand, Abstractive summarization uses generative AI to create new, original sentences that summarize the text’s key points. -

It is also imperative to understand the structure of the data type that a function returns so you can correctly handle the output in your queries. To inspect the

azure_cognitive.sentencetype returned by thesummarize_extractive()function, run:\dT+ azure_cognitive.sentence -

The output of the above command reveals the

sentencetype is atuple. To examine the structure of thattupleand review the columns contained within thesentencecomposite type, execute:\d+ azure_cognitive.sentenceThe output of that command should look similar to the following:

Composite type "azure_cognitive.sentence" Column | Type | Collation | Nullable | Default | Storage | Description ------------+------------------+-----------+----------+---------+----------+------------- text | text | | | | extended | rank_score | double precision | | | | plain |The

azure_cognitive.sentenceis a composite type containing the text of an extractive sentence and a rank score for each sentence, indicating how relevant the sentence is to the text’s main topic. Document summarization ranks extracted sentences, and you can determine whether they’re returned in the order they appear or according to their rank.

Create Summaries for Property Descriptions

In this task, you use the summarize_extractive() and summarize_abstractive() functions to create concise two-sentence summaries for property descriptions.

-

Now that you have reviewed the

summarize_extractive()function and thesentiment_analysis_resultit returns, let’s put the function to use. Execute the following simple query, which performs sentiment analysis on a handful of comments in thereviewstable:SELECT id, name, description, azure_cognitive.summarize_extractive(description, 'en', 2) AS extractive_summary FROM listings WHERE id IN (1, 2);Compare the two sentences in the

extractive_summaryfield in the output to the originaldescription, noting that the sentences are not original, but extracted from thedescription. The numeric values listed after each sentence are the rank score assigned by the Language service. -

Next, perform abstractive summarization on the identical records:

SELECT id, name, description, azure_cognitive.summarize_abstractive(description, 'en', 2) AS abstractive_summary FROM listings WHERE id IN (1, 2);The extension’s abstractive summarization capabilities provide a unique, natural-language summary that encapsulates the overall intent of the original text.

If you receive an error similar to the following, you chose a region that does not support abstractive summarization when creating your Azure environment:

ERROR: azure_cognitive.summarize_abstractive: InvalidRequest: Invalid Request. InvalidParameterValue: Job task: 'AbstractiveSummarization-task' failed with validation errors: ['Invalid Request.'] InvalidRequest: Job task: 'AbstractiveSummarization-task' failed with validation error: Document abstractive summarization is not supported in the region Central US. The supported regions are North Europe, East US, West US, UK South, Southeast Asia.To be able to perform this step and complete the remaining tasks using abstractive summarization, you must create a new Azure AI Language service in one of the supported regions specified in the error message. This service can be provisioned in the same resource group you used for other lab resources. Alternatively, you may substitute extractive summarization for the remaining tasks but will not get the benefit of being able to compare the output of the two different summarization techniques.

-

Run a final query to do a side-by-side comparison of the two summarization techniques:

SELECT id, azure_cognitive.summarize_extractive(description, 'en', 2) AS extractive_summary, azure_cognitive.summarize_abstractive(description, 'en', 2) AS abstractive_summary FROM listings WHERE id IN (1, 2);By placing the generated summaries side-by-side, it is easy to compare the quality of the summaries generated by each method. For the Margie’s Travel application, abstractive summarization is the better option, providing concise summaries that deliver high-quality information in a natural and readable manner. While giving some details, the extractive summaries are more disjointed and offer less value than the original content created by abstractive summarization.

Store Description Summary in the Database

-

Run the following query to alter the

listingstable, adding a newsummarycolumn:ALTER TABLE listings ADD COLUMN summary text; -

To use generative AI to create summaries for all the existing properties in the database, it is most efficient to send in the descriptions in batches, allowing the language service to process multiple records simultaneously.

WITH batch_cte AS ( SELECT azure_cognitive.summarize_abstractive(ARRAY(SELECT description FROM listings ORDER BY id), 'en', batch_size => 25) AS summary ), summary_cte AS ( SELECT ROW_NUMBER() OVER () AS id, ARRAY_TO_STRING(summary, ',') AS summary FROM batch_cte ) UPDATE listings AS l SET summary = s.summary FROM summary_cte AS s WHERE l.id = s.id;The update statement uses two common table expressions (CTEs) to work on the data before updating the

listingstable with summaries. The first CTE (batch_cte) sends all thedescriptionvalues from thelistingstable to the Language service to generate abstractive summaries. It does this in batches of 25 records at a time. The second CTE (summary_cte) uses the ordinal position of the summaries returned by thesummarize_abstractive()function to assign each summary anidcorresponding to the record thedescriptioncame from in thelistingstable. It also uses theARRAY_TO_STRINGfunction to pull the generated summaries out of the text array (text[]) return value and convert it into a simple string. Finally, theUPDATEstatement writes the summary into thelistingstable for the associated listing. -

As a last step, run a query to view the summaries written into the

listingstable:SELECT id, name, description, summary FROM listings LIMIT 5;

Generate an AI summary of reviews for a listing

For the Margie’s Travel app, displaying a summary of all reviews for a property helps users quickly assess the overall gist of reviews.

-

Run the following query, which combines all reviews for a listing into a single string and then generates abstractive summarization over that string:

SELECT unnest(azure_cognitive.summarize_abstractive(reviews_combined, 'en')) AS review_summary FROM ( -- Combine all reviews for a listing SELECT string_agg(comments, ' ') AS reviews_combined FROM reviews WHERE listing_id = 1 );

Clean up

Once you have completed this exercise, delete the Azure resources you created. You are charged for the configured capacity, not how much the database is used. Follow these instructions to delete your resource group and all resources you created for this lab.

-

Open a web browser and navigate to the Azure portal, and on the home page, select Resource groups under Azure services.

-

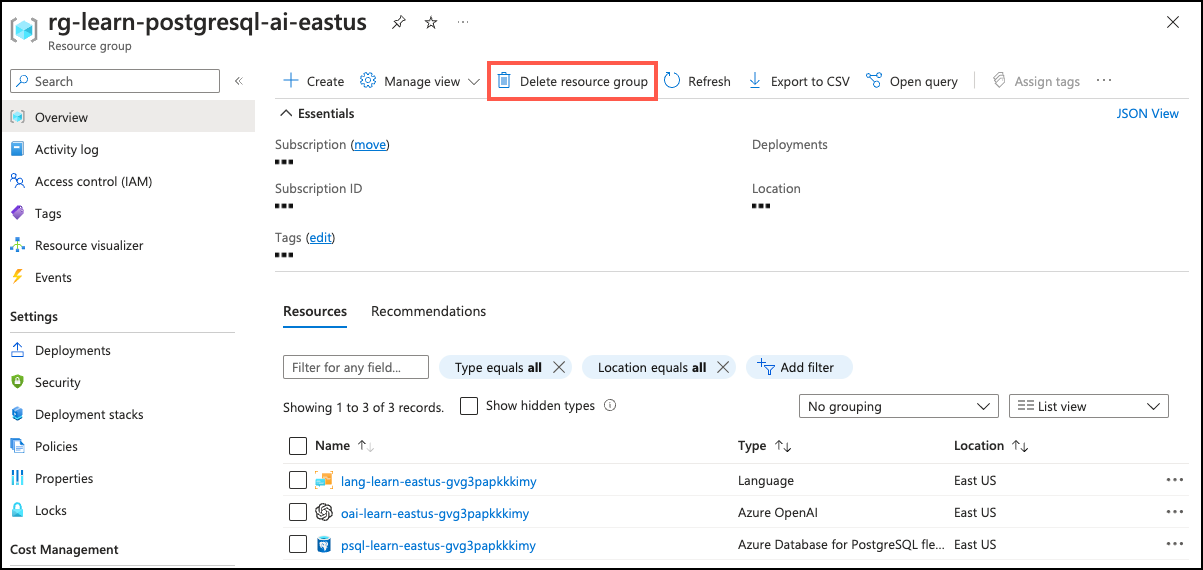

In the filter for any field search box, enter the name of the resource group you created for this lab, and then select your resource group from the list.

-

On the Overview page of your resource group, select Delete resource group.

-

In the confirmation dialog, enter the resource group name you are deleting to confirm and then select Delete.