Use Delta Lake in Azure Databricks

Delta Lake is an open source project to build a transactional data storage layer for Spark on top of a data lake. Delta Lake adds support for relational semantics for both batch and streaming data operations, and enables the creation of a Lakehouse architecture in which Apache Spark can be used to process and query data in tables that are based on underlying files in the data lake.

This lab will take approximately 30 minutes to complete.

Note: The Azure Databricks user interface is subject to continual improvement. The user interface may have changed since the instructions in this exercise were written.

Provision an Azure Databricks workspace

Tip: If you already have an Azure Databricks workspace, you can skip this procedure and use your existing workspace.

This exercise includes a script to provision a new Azure Databricks workspace. The script attempts to create a Premium tier Azure Databricks workspace resource in a region in which your Azure subscription has sufficient quota for the compute cores required in this exercise; and assumes your user account has sufficient permissions in the subscription to create an Azure Databricks workspace resource. If the script fails due to insufficient quota or permissions, you can try to create an Azure Databricks workspace interactively in the Azure portal.

- In a web browser, sign into the Azure portal at

https://portal.azure.com. -

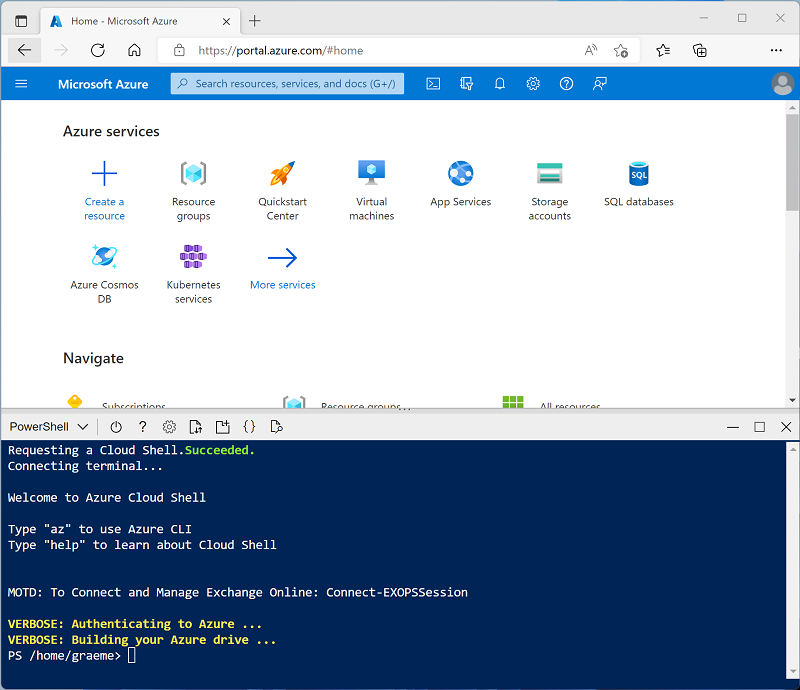

Use the [>_] button to the right of the search bar at the top of the page to create a new Cloud Shell in the Azure portal, selecting a PowerShell environment. The cloud shell provides a command line interface in a pane at the bottom of the Azure portal, as shown here:

Note: If you have previously created a cloud shell that uses a Bash environment, switch it to PowerShell.

-

Note that you can resize the cloud shell by dragging the separator bar at the top of the pane, or by using the —, ⤢, and X icons at the top right of the pane to minimize, maximize, and close the pane. For more information about using the Azure Cloud Shell, see the Azure Cloud Shell documentation.

-

In the PowerShell pane, enter the following commands to clone this repo:

rm -r mslearn-databricks -f git clone https://github.com/MicrosoftLearning/mslearn-databricks -

After the repo has been cloned, enter the following command to run the setup-serverless.ps1 script, which provisions an Azure Databricks workspace in an available region:

./mslearn-databricks/setup-serverless.ps1 -

If prompted, choose which subscription you want to use (this will only happen if you have access to multiple Azure subscriptions).

- Wait for the script to complete - this typically takes around 5 minutes, but in some cases may take longer. While you are waiting, review the Introduction to Delta Lake article in the Azure Databricks documentation.

Open the Azure Databricks Workspace

-

In the Azure portal, browse to the msl-xxxxxxx resource group that was created by the script (or the resource group containing your existing Azure Databricks workspace)

-

Select your Azure Databricks Service resource (named databricks-xxxxxxx if you used the setup script to create it).

-

In the Overview page for your workspace, use the Launch Workspace button to open your Azure Databricks workspace in a new browser tab; signing in if prompted.

Tip: As you use the Databricks Workspace portal, various tips and notifications may be displayed. Dismiss these and follow the instructions provided to complete the tasks in this exercise.

Create a notebook and ingest data

Now let’s create a Spark notebook and import the data that we’ll work with in this exercise.

-

In the sidebar, use the (+) New link to create a Notebook.

-

Change the default notebook name (Untitled Notebook [date]) to

Explore Delta Lakeand in the Connect drop-down list, select Serverless compute if it is not already selected. If the compute is not running, it may take a minute or so to start. -

In the first cell of the notebook, enter the following code, which creates a volume for storing product data.

%sql CREATE VOLUME IF NOT EXISTS product_data_volume -

Use the ▸ Run Cell menu option at the left of the cell to run it. Then wait for the Spark job run by the code to complete.

-

Under the existing code cell, use the + Code icon to add a new code cell. Then in the new cell, enter and run the following Python code.

import requests # Download the CSV file url = "https://raw.githubusercontent.com/MicrosoftLearning/mslearn-databricks/main/data/products.csv" response = requests.get(url) response.raise_for_status() # Get the current catalog catalog_name = spark.sql("SELECT current_catalog()").collect()[0][0] # Write directly to Unity Catalog volume volume_path = f"/Volumes/{catalog_name}/default/product_data_volume/products.csv" with open(volume_path, "wb") as f: f.write(response.content)This Python code downloads a CSV file containing product data from a GitHub URL and saves it directly into a Unity Catalog volume in Databricks, using the current catalog context to dynamically construct the storage path.

-

Under the existing code cell, use the + Code icon to add a new code cell. Then in the new cell, enter and run the following code to load the data from the file and view the first 10 rows.

df = spark.read.load(volume_path, format='csv', header=True) display(df.limit(10))

Load the file data into a delta table and perform an update

The data has been loaded into a dataframe. Let’s persist it into a delta table.

-

Add a new code cell and use it to run the following code:

# Create the table if it does not exist. Otherwise, replace the existing table. df.writeTo("Products").createOrReplace()The data for a delta lake table is stored in Parquet format. A log file is also created to track modifications made to the data.

-

The file data in Delta format can be loaded into a DeltaTable object, which you can use to view and update the data in the table. Run the following code in a new cell to update the data; reducing the price of product 771 by 10%.

from delta.tables import DeltaTable # Reference the Delta table in Unity Catalog deltaTable = DeltaTable.forName(spark, "Products") # Perform the update deltaTable.update( condition = "ProductID == 771", set = { "ListPrice": "ListPrice * 0.9" }) # View the updated data as a dataframe deltaTable.toDF().show(10)The update is persisted to the data in the delta folder, and will be reflected in any new dataframe loaded from that location.

Explore logging and time-travel

Data modifications are logged, enabling you to use the time-travel capabilities of Delta Lake to view previous versions of the data.

-

In a new code cell, use the following code to view the original version of the product data:

new_df = spark.read.option("versionAsOf", 0).table("Products") new_df.show(10) -

The log contains a full history of modifications to the data. Use the following code to see a record of the last 10 changes:

deltaTable.history(10).show(10, False, True)

Optimize table layout

The physical storage of table data and associated index data can be reorganized in order to reduce storage space and improve I/O efficiency when accessing the table. This is particularly useful after substantial insert, update, or delete operations on a table.

-

In a new code cell, use the following code to optimize the layout and clean up old versions of data files in the delta table:

spark.sql("OPTIMIZE Products") spark.sql("VACUUM Products RETAIN 168 HOURS") # 7 days

Delta Lake has a safety check to prevent you from running a dangerous VACUUM command. You can’t set a retention period lower than 168 hours in Databricks Serverless SQL because it restricts unsafe file deletions to prevent data corruption, and serverless environments don’t allow overriding this safety check.

Note: If you run VACUUM on a delta table, you lose the ability to time travel back to a version older than the specified data retention period.

Clean up

If you’ve finished exploring Azure Databricks, you can delete the resources you’ve created to avoid unnecessary Azure costs and free up capacity in your subscription.