Explore Content Safety in Azure AI Foundry

Azure AI services help users create AI applications with out-of-the-box, prebuilt, and customizable APIs and models. In this exercise, you will take a look at one of these services, Azure AI Content Safety, which enables you to moderate text and image content. In the Azure AI Foundry portal, Microsoft’s platform for creating intelligent applications, you will use Azure AI Content Safety to categorize text and assign it a severity score.

Note: The goal of this exercise is to get a general sense of how Azure AI services are provisioned and used. Content Safety is used as an example, but you are not expected to gain comprehensive knowledge of content safety in this exercise.

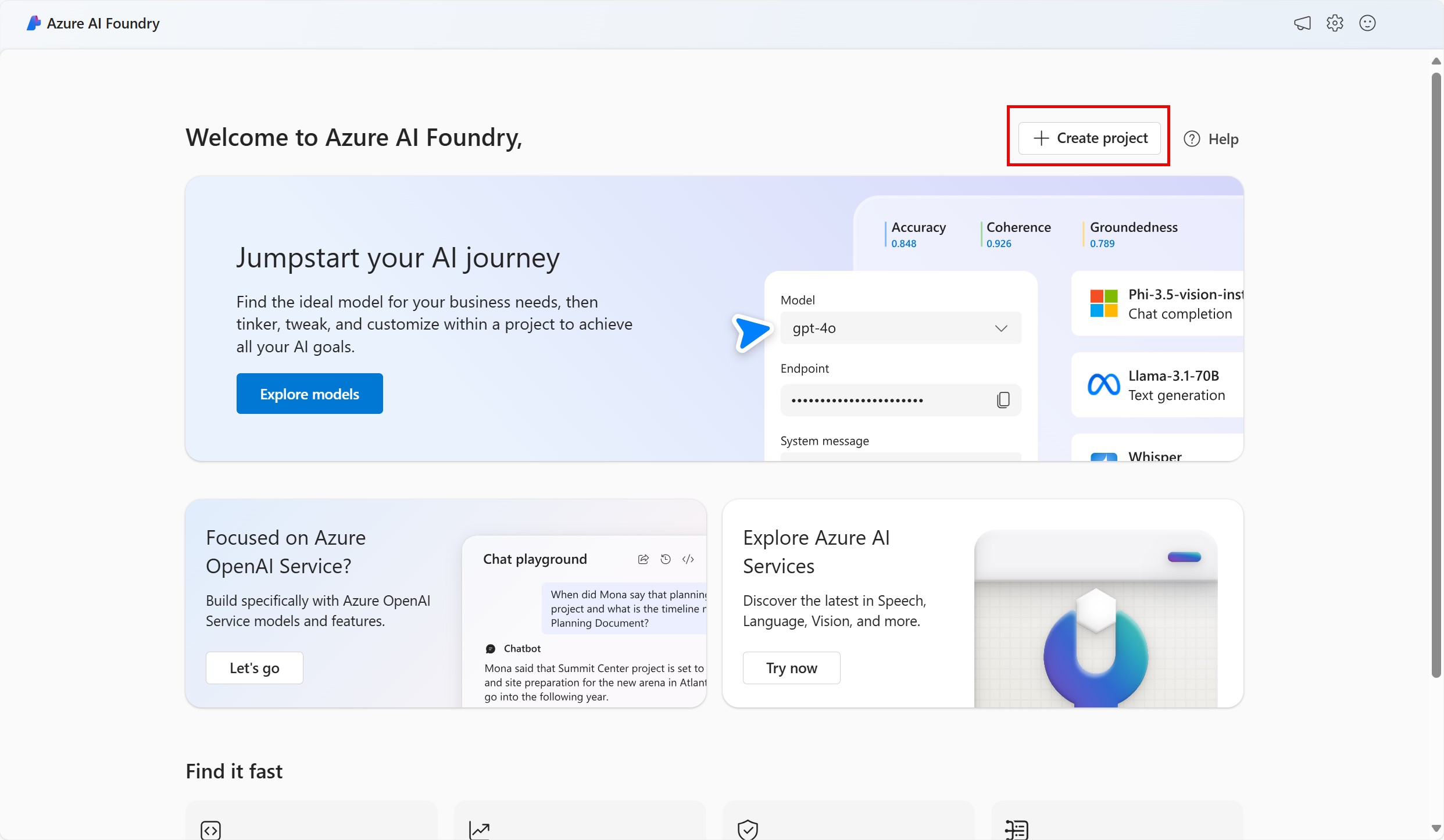

Create a project in Azure AI Foundry portal

- In a browser tab, navigate to the Azure AI Foundry portal.

- Sign in with your account.

- On the Azure AI Foundry portal home page, select Create a project. In Azure AI Foundry, projects are containers that help organize your work.

-

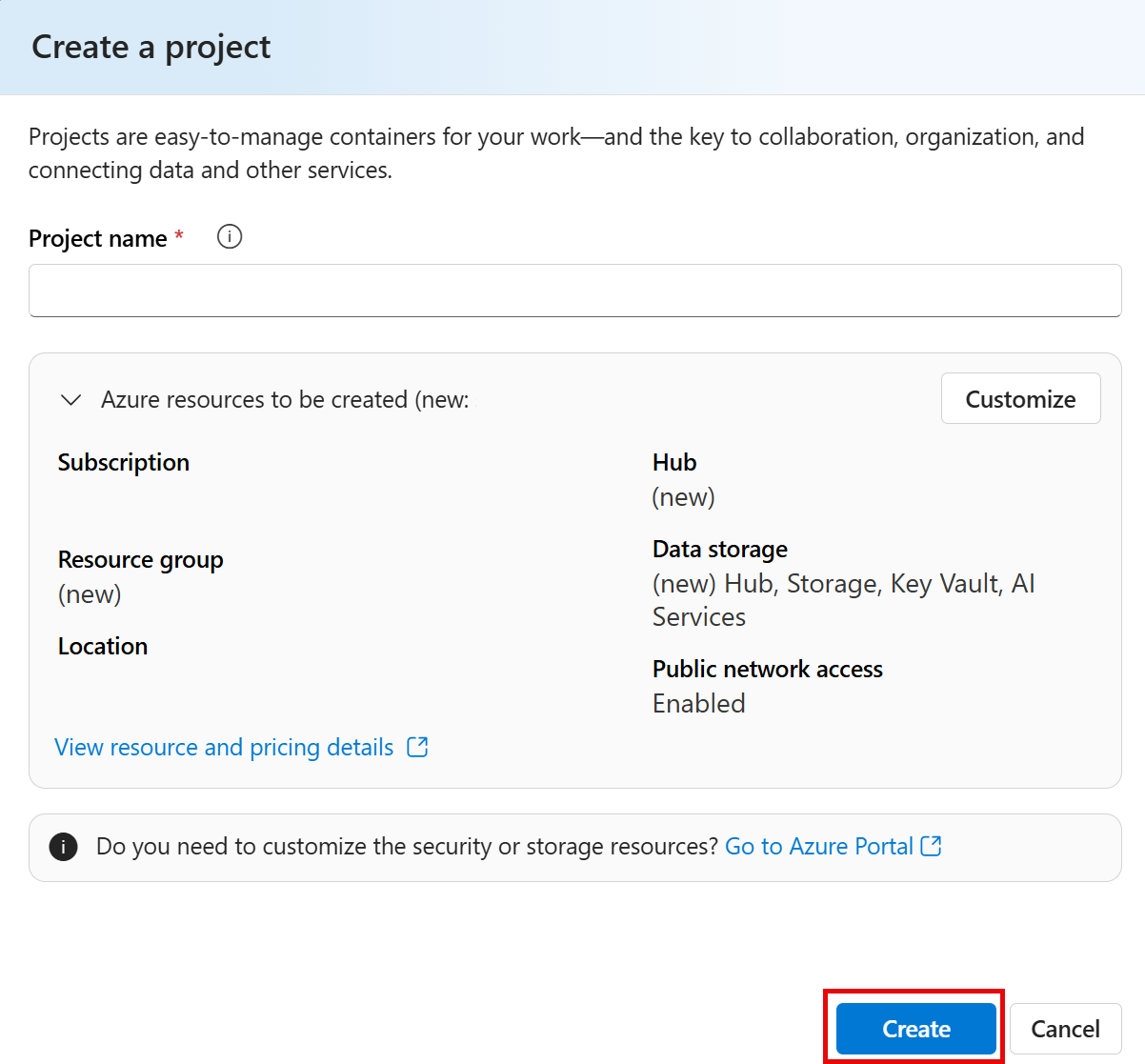

On the Create a project pane, you will see a generated project name, which you can keep as-is. Depending on whether you have created a hub in the past, you will either see a list of new Azure resources to be created or a drop-down list of existing hubs. If you see the drop-down list of existing hubs, select Create new hub, create a unique name for your hub, and select Next.

Important: To complete the rest of the lab, you need an Azure AI services resource provisioned in one of the supported locations listed in the next step.

- In the same Create a project pane, select Customize and select one of the following locations: East US, France Central, Korea Central, West Europe, or West US. Then select Create.

- Take note of the resources that are created:

  - Azure AI services
  - Azure AI hub
  - Azure AI project
  - Storage account
  - Key vault
  - Resource group

- After the resources are created, you will be brought to your project’s Overview page.

-

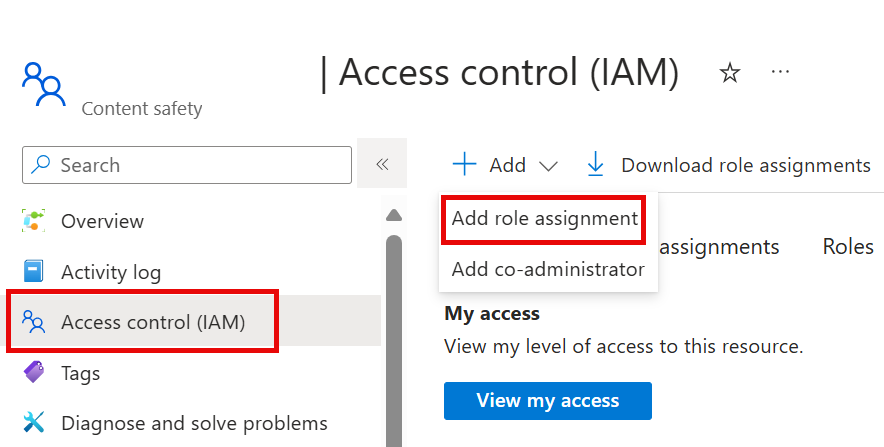

In order to use Content Safety, you need to make a permissions update to your Azure AI hub resource. To do this, open the Azure portal and log in with the same subscription you used to create your AI Foundry resources.

- In the Azure portal, use the search bar at the top of the page to find and select Azure AI Foundry. On the resource page, select the resource you just created whose type is Azure AI hub.

- On the left-hand pane, select Access Control (IAM). Then, on the pane that opens, select + Add, and then select Add role assignment.

- Search for Azure AI Safety Evaluator in the list of roles and select it. Then select Next.

- Use the following settings to assign yourself to the role:

  - Assign access to: select User, group, or service principal.
  - Members: click Select members.
    - In the Select members pane that opens, find your name, click the plus icon next to it, and then click Select.
  - Description: leave blank.

- Select Review + assign, then select Review + assign again to add the role assignment.

-

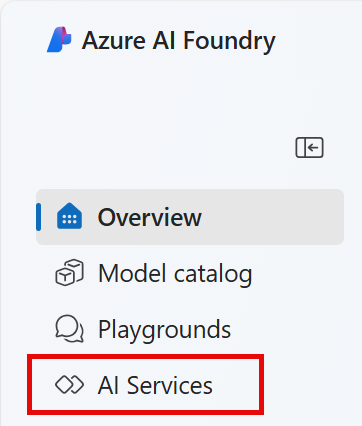

In your browser, return to the Azure AI Foundry portal. Select your project.

- In the left-hand menu, select AI Services.

-

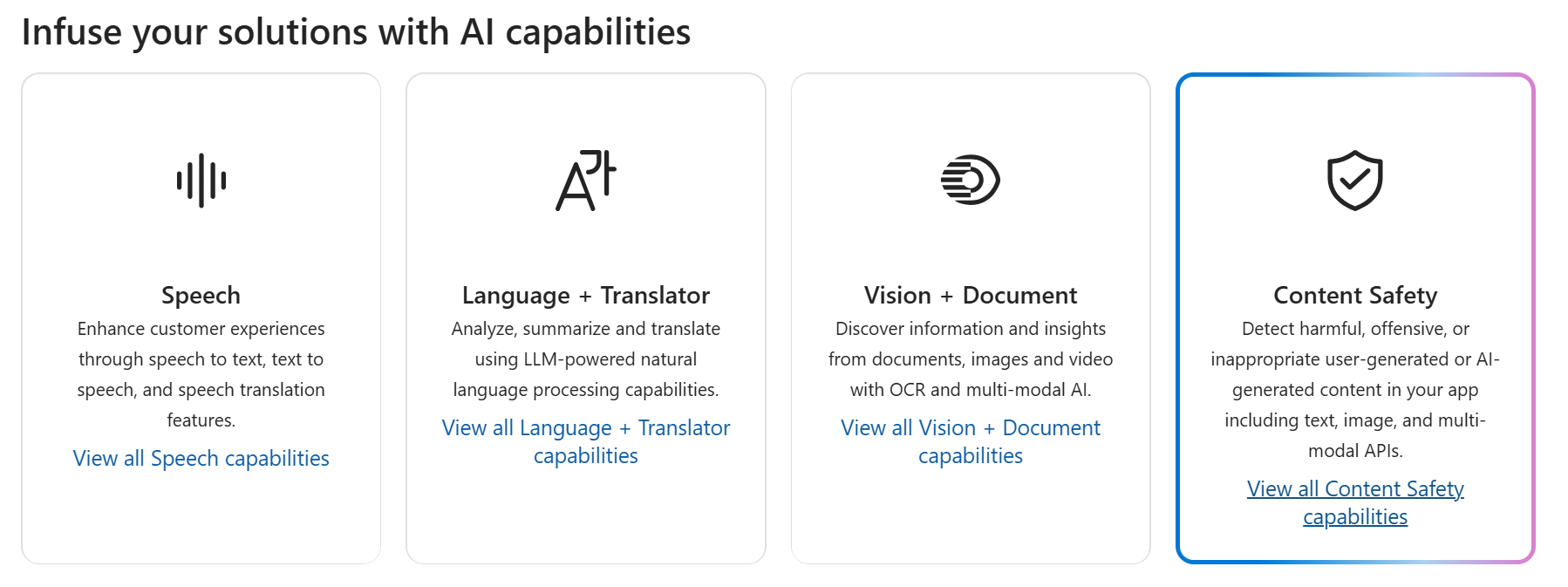

On the AI Services page, select the Vision + Document tile to try out Azure AI Vision and Document capabilities.

Try out text moderation with Content Safety in Azure AI Foundry portal

- On the Content Safety page, under Filter text content, select Moderate text content.

- On the Moderate text content page, under the Try it out heading, select the Azure AI services resource you just created from the drop-down menu.

- Under Run a simple test, select the Safe Content tile. Notice that the sample text is displayed in the box below.

- Click Run test. Running a test calls the Content Safety service’s deep learning model, which has already been trained to recognize unsafe content.

- In the Results panel, inspect the results. There are four severity levels (Safe, Low, Medium, and High) and four categories of harmful content (Hate, Sexual, Violence, and Self-harm). Does the Content Safety service consider this sample acceptable? Keep in mind that the results are probabilistic: a well-trained model, like one of Azure AI’s out-of-the-box models, returns results that have a high probability of matching how a human would label the content, but a match is not guaranteed. Each time you run a test, you call the model again.

- Now try another sample. Select the Violent content with misspelling tile, and check that its text is displayed in the box below.

- Click Run test and inspect the results in the Results panel again.
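Running a test in the portal is roughly equivalent to calling the Content Safety text analysis API yourself. The following Python sketch shows what such a call could look like using only the standard library. It assumes the REST operation `POST {endpoint}/contentsafety/text:analyze` with `api-version=2023-10-01` and key-based authentication through the `Ocp-Apim-Subscription-Key` header. The helper names `build_analyze_text_request` and `send` are hypothetical, and the endpoint and key are placeholders you would copy from your own Azure AI services resource.

```python
import json
import urllib.request

# Assumption: the GA REST API version of the Content Safety text operations.
API_VERSION = "2023-10-01"


def build_analyze_text_request(endpoint: str, key: str, text: str) -> urllib.request.Request:
    """Build (but do not send) a POST request to the text:analyze operation.

    Hypothetical helper: endpoint and key come from your Azure AI services resource.
    """
    url = f"{endpoint.rstrip('/')}/contentsafety/text:analyze?api-version={API_VERSION}"
    body = {
        "text": text,
        # The four harm categories reported in the Results panel.
        "categories": ["Hate", "SelfHarm", "Sexual", "Violence"],
        # Four-level output: severities 0, 2, 4, 6 (Safe, Low, Medium, High).
        "outputType": "FourSeverityLevels",
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Ocp-Apim-Subscription-Key": key,
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request: urllib.request.Request) -> dict:
    """Send the request and parse the JSON response (requires network access and a valid key)."""
    with urllib.request.urlopen(request) as response:
        return json.load(response)
```

For example, `send(build_analyze_text_request("https://<your-resource>.cognitiveservices.azure.com/", "<your-key>", "sample text"))` would return a JSON object whose `categoriesAnalysis` list holds one severity per category. Keeping request construction separate from sending makes the request easy to inspect without a network call.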

You can run tests on all the samples provided, then inspect the results.
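To reason about the Results panel programmatically, you can map each category's severity score to a label and apply an acceptance rule. This Python sketch assumes the JSON response shape of the text analysis API (a `categoriesAnalysis` list of objects with `category` and `severity` fields) and the four-level output, where severities 0, 2, 4, and 6 correspond to Safe, Low, Medium, and High. The `summarize` function and its `max_allowed` threshold are hypothetical: the threshold stands in for whatever policy your own application uses to decide whether content is acceptable.

```python
# Assumption: four-level output, where the service reports severities 0, 2, 4, 6.
SEVERITY_LABELS = {0: "Safe", 2: "Low", 4: "Medium", 6: "High"}


def summarize(result: dict, max_allowed: int = 0) -> tuple[dict, bool]:
    """Label each category's severity and decide whether the text is acceptable.

    Hypothetical policy: the text is acceptable only if no category's severity
    exceeds max_allowed (the default 0 rejects anything above Safe).
    """
    labels = {}
    acceptable = True
    for item in result.get("categoriesAnalysis", []):
        severity = item.get("severity", 0)
        # Unexpected values (for example from eight-level output) are labeled explicitly.
        labels[item["category"]] = SEVERITY_LABELS.get(severity, f"Unknown ({severity})")
        if severity > max_allowed:
            acceptable = False
    return labels, acceptable
```

With the default `max_allowed=0`, any category above Safe marks the text as unacceptable; raising the threshold to 2 would, for instance, let low-severity content through while still rejecting Medium and High.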

Clean-up

If you don’t intend to do more exercises, delete any resources that you no longer need to avoid accruing unnecessary costs.

- Open the Azure portal and select the resource group that contains the resource you created.

- Select the resource and select Delete and then Yes to confirm. The resource is then deleted.

Learn more

This exercise demonstrated only some of the capabilities of the Content Safety service. To learn more about what you can do with this service, see the Content Safety page.