Explore computer vision

In this exercise, you’ll use a chat playground to interact with a generative AI solution that can analyze and interpret images. The goal of this exercise is to explore a common pattern for combining text and visual input in a prompt for a generative AI model.

This exercise should take approximately 15 minutes to complete.

Prepare for image-based chat

In this exercise you use a generative AI model in a chat playground to respond to prompts that include image data.

Note: The chat application uses the Microsoft Phi 3 Mini model small language model to interpret and respond to prompts. Many modern language models are multimodal - in other words, they support multiple formats of input, including text and images. However, the Microsoft Phi 3 model is text-based only, so we’ll also use the the MobileNetV3 CNN-based computer vision model for image classification and include the predicted image class in the prompt. The models will run in your browser, on your local computer. Performance may vary depending on the available memory in your computer and your network bandwidth to download the models. If WebLLM models are not supported in your browser, a fallback mode with reduced functionality will be enabled. After opening the app, use the ? (About this app) icon in the chat area to find out more.

-

In a web browser, open the Chat Playground at

https://aka.ms/chat-playground.The app intiializes by downloading a language model.

Tip: The first time you open the chat playground, it may take a few minutes for the model to download. Subsequent downloads will be faster.

- While waiting for the model to download, open a new browser tab, and download images.zip from

https://aka.ms/ai-imagesto your local computer. - Extract the downloaded archive in a local folder to see the files it contains. These files are the images you will use AI to analyze.

-

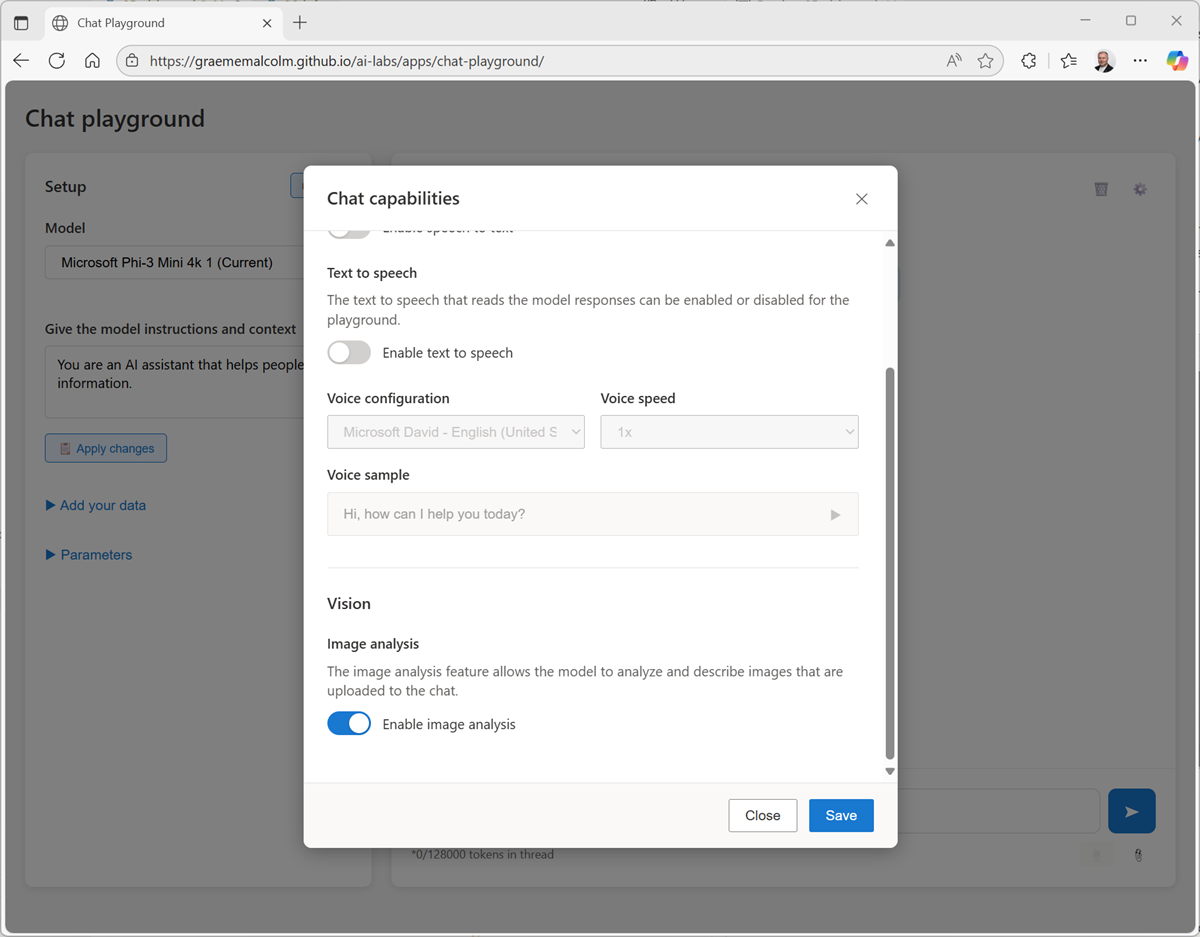

Return to the browser tab containing the chat playground and ensure the language model has downloaded (or if it failed to download, the model has been set to the “None” fallback). Then, in the configuration pane on the left pane, in the Vision section, enable Image analysis and wait for the computer vision model to be downloaded and initialized.

In the chat interface, an Upload image (📎) button is enabled.

-

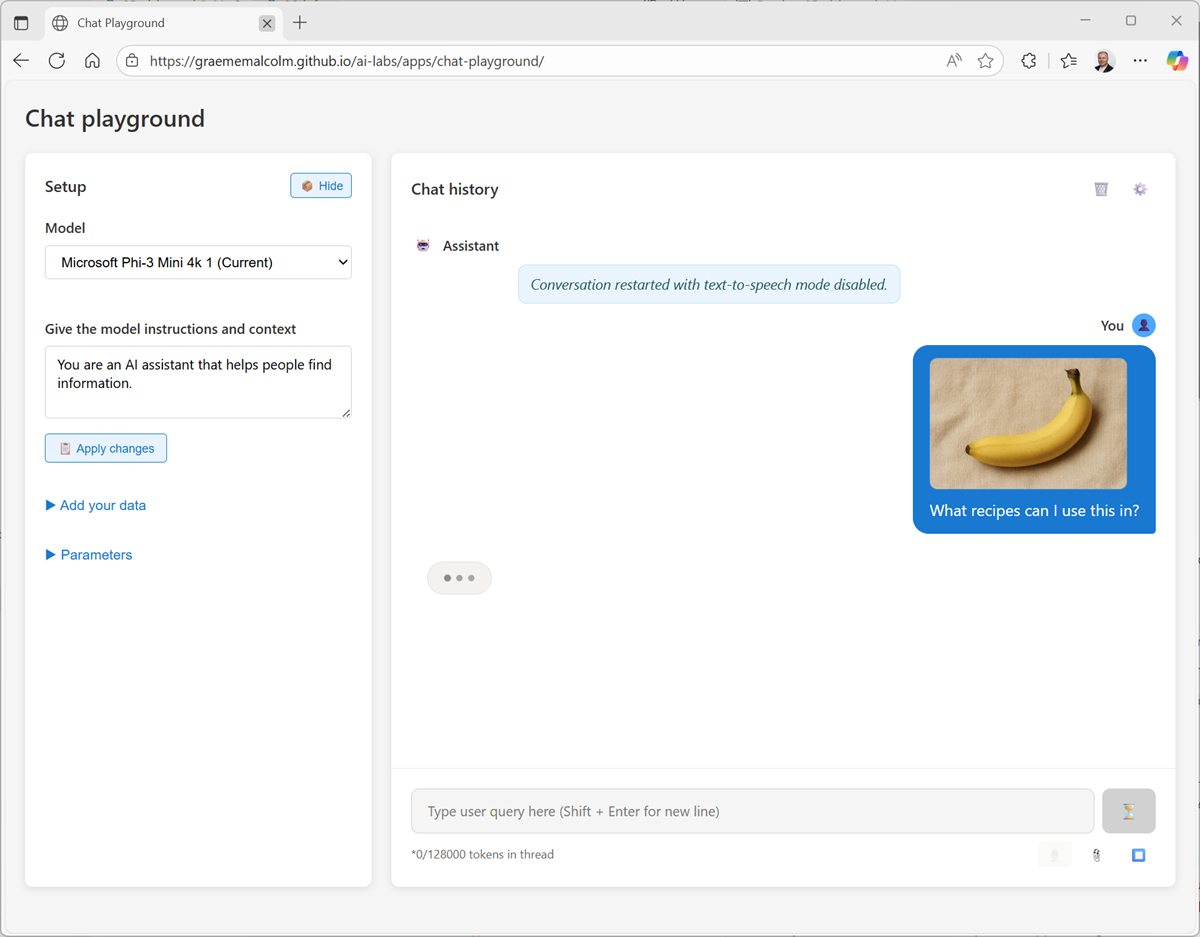

Click the Upload image button, and browse to select one of the images you extracted on your computer.

A thumbnail of the image is added to the prompt input area.

-

Enter a prompt like

Suggest a recipe for this.. The image is included in the message.

The MobileNetV3 model is used to determine the likely subject of the image, and the results of that analysis is included in the prompt to the Phi language model. The result should be a reponse that uses the image information to answer the question.

-

Submit prompts that include the other images, such as

How should I cook this?orWhat desserts could I make with this?Note: When using the “None” fallback model, the results depend on Wikipedia search results based on keywords extracted from the prompt and the class name predicted by the image classification model. The relevance of results may vary!

- If you want to explore further, you can upload your own images and enter appropriate prompts. The combination of a small language model (or simple keyword search in Wikipedia) and a limited computer vision model means that the quality of the responses may be highly variable compared to a true multimodal large language model!

Summary

In this exercise, you explored the use of computer vision with a generative AI model in a chat playground.

The app used in this exercise is based on a simplified version of the chat playground in the Microsoft Foundry portal. Microsoft Foundry supports a range of multimodal models that can accept combined image and text input, enabling significantly more complex image interpretation than this simple example. Additionally, you can use the Azure Content Understanding tool to analyze images.