Develop a multi-agent solution (deprecated)

In this exercise, you’ll practice using the sequential orchestration pattern in the Microsoft Agent Framework SDK. You’ll create a simple pipeline of three agents that work together to process customer feedback and suggest next steps. You’ll create the following agents:

- The Summarizer agent will condense raw feedback into a short, neutral sentence.

- The Classifier agent will categorize the feedback as Positive, Negative, or a Feature request.

- Finally, the Recommended Action agent will recommend an appropriate follow-up step.

You’ll learn how to use the Microsoft Agent Framework SDK to break down a problem, route it through the right agents, and produce actionable results. Let’s get started!

This exercise should take approximately 30 minutes to complete.

Note: Some of the technologies used in this exercise are in preview or in active development. You may experience some unexpected behavior, warnings, or errors.

Deploy a model in a Microsoft Foundry project

Let’s start by creating a Foundry project.

-

In a web browser, open the Foundry portal at

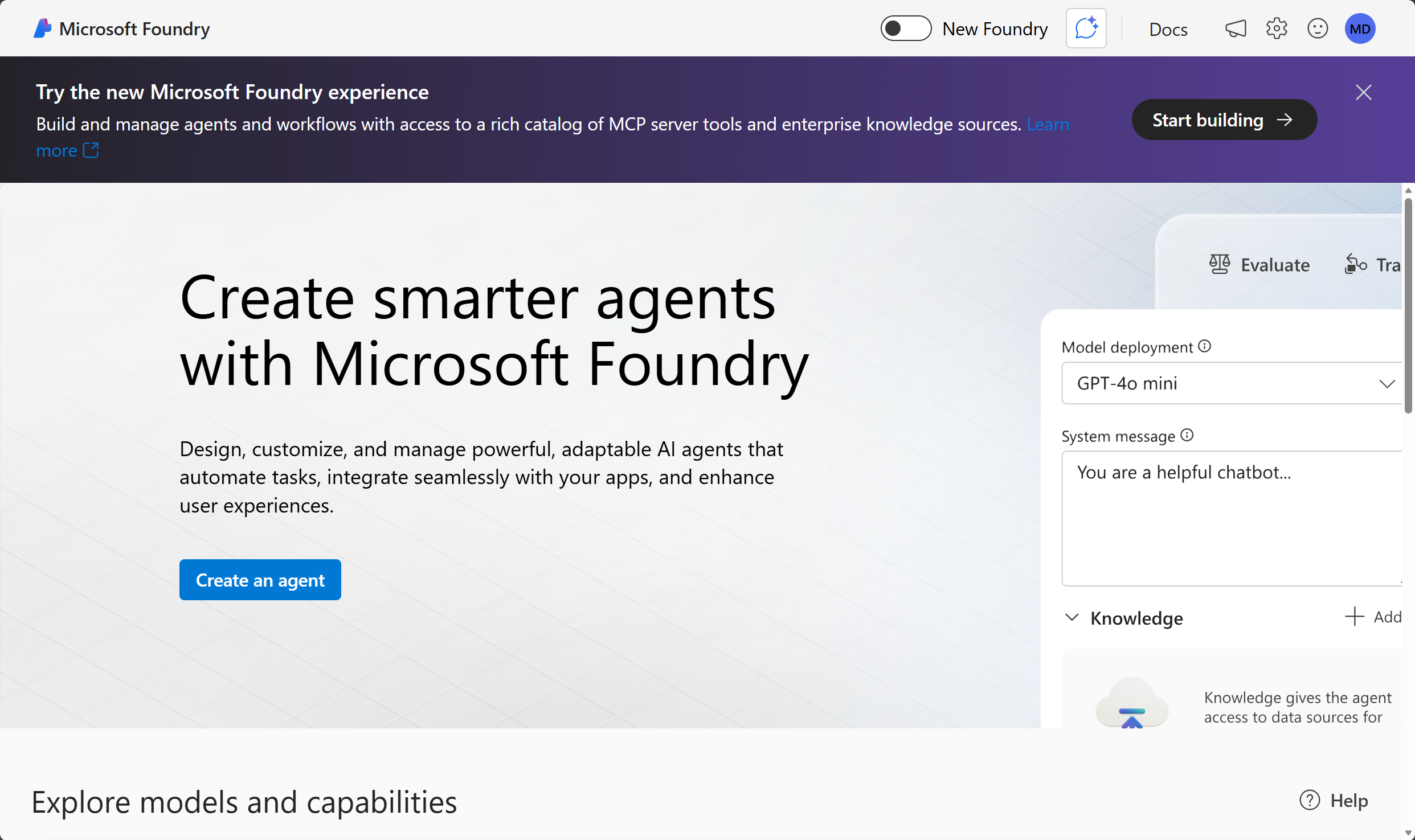

https://ai.azure.comand sign in using your Azure credentials. Close any tips or quick start panes that are opened the first time you sign in, and if necessary use the Foundry logo at the top left to navigate to the home page, which looks similar to the following image (close the Help pane if it’s open):

Important: For this lab, you’re using the New Foundry experience.

- In the top banner, select Start building to try the new Microsoft Foundry Experience.

- When prompted, create a new project, and enter a valid name for your project.

- Expand Advanced options and specify the following settings:

- Foundry resource: A valid name for your Foundry resource

- Subscription: Your Azure subscription

- Resource group: Select your resource group, or create a new one

- Region: Select any AI Foundry recommended**

* Some Azure AI resources are constrained by regional model quotas. In the event of a quota limit being exceeded later in the exercise, there’s a possibility you may need to create another resource in a different region.

-

Select Create and wait for your project to be created.

-

After your project is created, select Build from the navigation bar.

-

Select Models from the left-hand menu, and then select Deploy a base model.

-

Enter gpt-4.1 in the search box, and then select the gpt-4.1 model from the search results.

-

Select Deploy with the default settings to create a deployment of the model.

After the model is deployed, the playground for the model is displayed.

-

In the navigation bar on the left, select Microsoft Foundry to return to the Foundry home page.

- Copy the Project endpoint value to a notepad, as you’ll use them to connect to your project in a client application.

Create an AI Agent client app

Now you’re ready to create a client app that uses the Microsoft Agent Framework SDK to implement your multi-agent solution.

Prepare the environment

-

Open a new browser tab (keeping the Foundry portal open in the existing tab). Then in the new tab, browse to the Azure portal at

https://portal.azure.com; signing in with your Azure credentials if prompted.Close any welcome notifications to see the Azure portal home page.

-

Use the [>_] button to the right of the search bar at the top of the page to create a new Cloud Shell in the Azure portal, selecting a PowerShell environment with no storage in your subscription.

The cloud shell provides a command-line interface in a pane at the bottom of the Azure portal. You can resize or maximize this pane to make it easier to work in.

Note: If you have previously created a cloud shell that uses a Bash environment, switch it to PowerShell.

-

In the cloud shell toolbar, in the Settings menu, select Go to Classic version (this is required to use the code editor).

Ensure you've switched to the classic version of the cloud shell before continuing.

-

In the cloud shell pane, enter the following commands to clone the GitHub repo containing the code files for this exercise (type the command, or copy it to the clipboard and then right-click in the command line and paste as plain text):

rm -r ai-agents -f git clone https://github.com/MicrosoftLearning/mslearn-ai-agents ai-agentsTip: As you enter commands into the cloud shell, the output may take up a large amount of the screen buffer and the cursor on the current line may be obscured. You can clear the screen by entering the

clscommand to make it easier to focus on each task. -

When the repo has been cloned, enter the following command to change the working directory to the folder containing the code files and list them all.

cd ai-agents/Labfiles/05-agent-orchestration/Python ls -a -lThe provided files include application code and a file for configuration settings.

Configure the application settings

-

In the cloud shell command-line pane, enter the following command to install the libraries you’ll use:

python -m venv labenv ./labenv/bin/Activate.ps1 pip install agent-framework==1.0.0b260128 --pre -

Enter the following command to edit the configuration file that is provided:

code .envThe file is opened in a code editor.

-

In the code file, replace the your_project_endpoint placeholder with the endpoint for your project (copied from the project Overview page in the Foundry portal) and ensure that the AZURE_AI_MODEL_DEPLOYMENT_NAME variable is set to your model deployment name (which should be gpt-4.1).

-

After you’ve replaced the placeholders, use the CTRL+S command to save your changes and then use the CTRL+Q command to close the code editor while keeping the cloud shell command line open.

Create AI agents

Now you’re ready to create the agents for your multi-agent solution! Let’s get started!

-

Enter the following command to edit the agents.py file:

code agents.py -

At the top of the file under the comment Add references, and add the following code to reference the namespaces in the libraries you’ll need to implement your agent:

# Add references import asyncio from typing import cast from agent_framework import ChatMessage, Role, SequentialBuilder, WorkflowOutputEvent from agent_framework.azure import AzureAIAgentClient from azure.identity import AzureCliCredential -

In the main function, take a moment to review the agent instructions. These instructions define the behavior of each agent in the orchestration.

-

Add the following code under the comment Create the chat client:

# Create the chat client credential = AzureCliCredential() async with ( AzureAIAgentClient(credential=credential) as chat_client, ):Note that the AzureCliCredential object will allow your code to authenticate to your Azure account. The AzureAIAgentClient object will automatically include the Foundry project settings from the .env configuration.

-

Add the following code under the comment Create agents:

(Be sure to maintain the indentation level)

# Create agents summarizer = chat_client.as_agent( instructions=summarizer_instructions, name="summarizer", ) classifier = chat_client.as_agent( instructions=classifier_instructions, name="classifier", ) action = chat_client.as_agent( instructions=action_instructions, name="action", )

Create a sequential orchestration

-

In the main function, find the comment Initialize the current feedback and add the following code:

(Be sure to maintain the indentation level)

# Initialize the current feedback feedback=""" I use the dashboard every day to monitor metrics, and it works well overall. But when I'm working late at night, the bright screen is really harsh on my eyes. If you added a dark mode option, it would make the experience much more comfortable. """ -

Under the comment Build a sequential orchestration, add the following code to define a sequential orchestration with the agents you defined:

# Build sequential orchestration workflow = SequentialBuilder().participants([summarizer, classifier, action]).build()The agents will process the feedback in the order they are added to the orchestration.

-

Add the following code under the comment Run and collect outputs:

# Run and collect outputs outputs: list[list[ChatMessage]] = [] async for event in workflow.run_stream(f"Customer feedback: {feedback}"): if isinstance(event, WorkflowOutputEvent): outputs.append(cast(list[ChatMessage], event.data))This code runs the orchestration and collects the output from each of the participating agents.

-

Add the following code under the comment Display outputs:

# Display outputs if outputs: for i, msg in enumerate(outputs[-1], start=1): name = msg.author_name or ("assistant" if msg.role == Role.ASSISTANT else "user") print(f"{'-' * 60}\n{i:02d} [{name}]\n{msg.text}")This code formats and displays the messages from the workflow outputs you collected from the orchestration.

-

Use the CTRL+S command to save your changes to the code file. You can keep it open (in case you need to edit the code to fix any errors) or use the CTRL+Q command to close the code editor while keeping the cloud shell command line open.

Sign into Azure and run the app

Now you’re ready to run your code and watch your AI agents collaborate.

-

In the cloud shell command-line pane, enter the following command to sign into Azure.

az loginYou must sign into Azure - even though the cloud shell session is already authenticated.

Note: In most scenarios, just using az login will be sufficient. However, if you have subscriptions in multiple tenants, you may need to specify the tenant by using the –tenant parameter. See Sign into Azure interactively using the Azure CLI for details.

-

When prompted, follow the instructions to open the sign-in page in a new tab and enter the authentication code provided and your Azure credentials. Then complete the sign in process in the command line, selecting the subscription containing your Foundry hub if prompted.

-

After you have signed in, enter the following command to run the application:

python agents.pyYou should see some output similar to the following:

------------------------------------------------------------ 01 [user] Customer feedback: I use the dashboard every day to monitor metrics, and it works well overall. But when I'm working late at night, the bright screen is really harsh on my eyes. If you added a dark mode option, it would make the experience much more comfortable. ------------------------------------------------------------ 02 [summarizer] User requests a dark mode for better nighttime usability. ------------------------------------------------------------ 03 [classifier] Feature request ------------------------------------------------------------ 04 [action] Log as enhancement request for product backlog. -

Optionally, you can try running the code using different feedback inputs, such as:

I use the dashboard every day to monitor metrics, and it works well overall. But when I'm working late at night, the bright screen is really harsh on my eyes. If you added a dark mode option, it would make the experience much more comfortable.I reached out to your customer support yesterday because I couldn't access my account. The representative responded almost immediately, was polite and professional, and fixed the issue within minutes. Honestly, it was one of the best support experiences I've ever had.

Summary

In this exercise, you practiced sequential orchestration with the Microsoft Agent Framework SDK, combining multiple agents into a single, streamlined workflow. Great work!

Clean up

If you’ve finished exploring Azure AI Agent Service, you should delete the resources you have created in this exercise to avoid incurring unnecessary Azure costs.

-

Return to the browser tab containing the Azure portal (or re-open the Azure portal at

https://portal.azure.comin a new browser tab) and view the contents of the resource group where you deployed the resources used in this exercise. -

On the toolbar, select Delete resource group.

-

Enter the resource group name and confirm that you want to delete it.